Sixty years after transistors were invented and nearly five decades since they were first integrated into silicon chips, the tiny on-off switches dubbed the ‘nerve cells’ of the information age are starting to show their age.

The devices — whose miniaturisation over time set in motion the race for faster, smaller and cheaper electronics — have been shrunk so much that the day is approaching when it will be physically impossible to make them even tinier.

Once chip makers can’t squeeze any more transistors onto the same-sized slice of silicon, the dramatic performance gains and cost reductions that computing has enjoyed could slow sharply. The engine that has driven the digital revolution, and with it the modern economy, could grind to a halt.

Even Gordon Moore, the Intel cofounder who famously predicted in 1965 that the number of transistors on a chip would keep doubling at a regular pace (a rate he revised in 1975 to every two years), sees the end approaching, an outcome the chip industry is scrambling to avoid. “I can see (it lasting) another decade or so,” he said of the axiom now known as Moore’s Law. “Beyond that, things look tough. But that’s been the case many times in the past.”

Preparing for the day they can’t add more transistors, chip companies are pouring billions of dollars into new ways to use existing transistors, instructing them to behave in different and more powerful ways. Intel, the world’s largest semiconductor company, predicts that a number of ‘highly speculative’ alternative technologies, such as quantum computing and optical switches, will be needed to continue Moore’s Law beyond 2020.

“Things are changing much faster now, in this current period, than they did for many decades,” said Intel chief technology officer Justin Rattner. “The pace of change is accelerating because we’re approaching a number of different physical limits at the same time. We’re really working overtime to make sure we can continue to follow Moore’s Law.”

Transistors work something like light switches, flipping on and off inside a chip to generate the ones and zeros that store and process information inside a computer. The transistor was invented by scientists William Shockley, John Bardeen and Walter Brattain to amplify voices in telephones for a Bell Labs project, an effort for which they later shared the Nobel Prize in physics.

On December 16, 1947, Bardeen and Brattain built the first transistor, a point-contact device. The next month, on January 23, 1948, Shockley, a member of the same research group, conceived another type, the junction transistor, which went on to become the preferred design because it was easier to manufacture.

Transistors’ small size and low power consumption made them ideal replacements for the bulky vacuum tubes then used to amplify electrical signals and switch electrical currents. AT&T saw them as a replacement for the clattering electromechanical switches in its telephone exchanges.

Transistors eventually found their way into portable radios and other electronic devices. Today they are most prominently used as the building blocks of integrated circuits, another Nobel Prize-winning invention and the foundation of microprocessors, memory chips and other semiconductor devices.

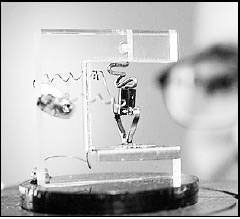

A replica of the first transistor at the Computer History Museum.